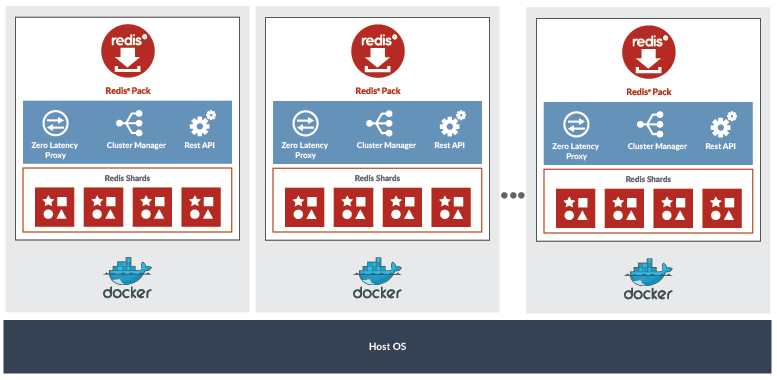

Multi-Nodes Redis Cluster With Docker

Go to the complete article here.

MapR-DB Table Replication allows data to be replicated to another table that could be on the same cluster or in another cluster. This is different from the automatic, intra-cluster replication that copies the data onto different physical nodes for high availability and to prevent data loss.

This tutorial focuses on the MapR-DB Table Replication that replicates data between tables on different clusters.

Replicating data between different clusters allows you to:

Replication Topologies

MapR-DB Table Replication provides various topologies to adapt the replication to the business and technical requirements:

In this example you will learn how to set up multi-master replication.

Posted by Tug Grall at 1:15 AM


MapR Ecosystem Pack (MEP) 2.0 comes with some new features related to MapR Streams:

MapR Ecosystem Packs (MEPs) are a way to deliver ecosystem upgrades decoupled from core upgrades, allowing you to upgrade your tooling independently of your Converged Data Platform. You can learn more about MEP 2.0 in this article.

In this blog we describe how to use the REST Proxy to publish and consume messages to/from MapR Streams. The REST Proxy is a great addition to the MapR Converged Data Platform allowing any programming language to use MapR Streams.

The Kafka REST Proxy provided with the MapR Streams tools can be used with MapR Streams (the default), but also in a hybrid mode with Apache Kafka. In this article we will focus on MapR Streams.

A stream is a collection of topics that you can manage as a group by:

You can find more information about MapR Streams concepts in the documentation.

On your MapR cluster or Sandbox, run the following commands:

$ maprcli stream create -path /apps/iot-stream -produceperm p -consumeperm p -topicperm p

$ maprcli stream topic create -path /apps/iot-stream -topic sensor-json -partitions 3

$ maprcli stream topic create -path /apps/iot-stream -topic sensor-binary -partitions 3

Open two terminal windows and run the consumer Kafka utilities using the following commands:

$ /opt/mapr/kafka/kafka-0.9.0/bin/kafka-console-consumer.sh --new-consumer --bootstrap-server this.will.be.ignored:9092 --topic /apps/iot-stream:sensor-json

$ /opt/mapr/kafka/kafka-0.9.0/bin/kafka-console-consumer.sh --new-consumer --bootstrap-server this.will.be.ignored:9092 --topic /apps/iot-stream:sensor-binary

These two terminal windows will allow you to see the messages posted on the different topics.

The endpoint /topics/[topic_name] returns information about a topic. In MapR Streams, topics are part of a stream identified by a path; to access a topic through the REST API you have to use the full path and encode it in the URL. For example, /apps/iot-stream:sensor-json is encoded as %2Fapps%2Fiot-stream%3Asensor-json.

Run the following command to get information about the sensor-json topic:

$ curl -X GET http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-json

Note: For simplicity, I am running the command from the node where the Kafka REST Proxy is running, so it is possible to use localhost.
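If you build these URLs from code rather than by hand, the encoding step can be sketched in Python as follows. This is only an illustration: the helper name `topic_url` is hypothetical, and the base URL is assumed to be the same localhost:8082 used in the curl command.

```python
from urllib.parse import quote

# Assumed base URL of the REST Proxy, copied from the curl example.
REST_PROXY = "http://localhost:8082"


def topic_url(topic_path: str, base: str = REST_PROXY) -> str:
    """Return the /topics/ URL for a full MapR Streams topic path.

    topic_url is a hypothetical helper, not part of any MapR library.
    """
    # safe="" forces "/" to be percent-encoded as well;
    # by default quote() leaves "/" untouched.
    return f"{base}/topics/{quote(topic_path, safe='')}"


if __name__ == "__main__":
    print(topic_url("/apps/iot-stream:sensor-json"))
```

The important detail is `safe=""`: without it the slashes of the stream path stay unencoded and the proxy would see several URL segments instead of one topic name.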

You can pretty-print the JSON by piping the output to a Python command such as:

$ curl -X GET http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-json | python -m json.tool

Default Stream

As mentioned above, the stream path is part of the topic name you have to use in the command; however, it is possible to configure the MapR Kafka REST Proxy to use a default stream.

For this you should add the following property in the /opt/mapr/kafka-rest/kafka-rest-2.0.1/config/kafka-rest.properties file:

streams.default.stream=/apps/iot-stream

When you change the Kafka REST proxy configuration, you must restart the service using maprcli or MCS.

The main reason to use the streams.default.stream property is to simplify the URLs used by the application. For example, with streams.default.stream set you can use curl -X GET http://localhost:8082/topics/, while without it the stream path must be part of the URL: http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-json

In this article, all the URLs contain the encoded stream name, so you can start using the Kafka REST Proxy without changing the configuration and also use it with different streams.

The Kafka REST Proxy for MapR Streams allows applications to publish messages to MapR Streams. Messages can be sent as JSON or as binary content (Base64 encoded).

To send a JSON message, use an HTTP POST with the Content-Type application/vnd.kafka.json.v1+json and the following body:

{
  "records": [
    {
      "value": {
        "temp": 10,
        "speed": 40,
        "direction": "NW"
      }
    }
  ]
}

The complete request is:

curl -X POST -H "Content-Type: application/vnd.kafka.json.v1+json" \
  --data '{"records":[{"value": {"temp" : 10 , "speed" : 40 , "direction" : "NW"} }]}' \
  http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-json

You should see the message printed in the terminal window where the /apps/iot-stream:sensor-json consumer is running.
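Since the REST Proxy is meant to open MapR Streams to any programming language, here is a rough Python equivalent of the curl request, using only the standard library. The function name `json_produce_request` is hypothetical, and the base URL is assumed to be the localhost:8082 address used throughout this article. The sketch only builds the request, so it can be inspected without a running proxy.

```python
import json
import urllib.request
from urllib.parse import quote


def json_produce_request(topic_path, values, base="http://localhost:8082"):
    """Build (but do not send) a POST that publishes JSON values to a topic.

    Hypothetical helper; the base URL is an assumption taken from the
    curl examples in this article.
    """
    # One entry in "records" per message, each wrapped in a "value" field.
    body = json.dumps({"records": [{"value": v} for v in values]})
    return urllib.request.Request(
        f"{base}/topics/{quote(topic_path, safe='')}",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/vnd.kafka.json.v1+json"},
        method="POST",
    )


if __name__ == "__main__":
    req = json_produce_request(
        "/apps/iot-stream:sensor-json",
        [{"temp": 10, "speed": 40, "direction": "NW"}],
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the returned object to `urllib.request.urlopen` would actually send it; that step is left out here so the sketch runs anywhere.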

To send a binary message, use an HTTP POST with the Content-Type application/vnd.kafka.binary.v1+json and the following body:

{
  "records": [
    {
      "value": "SGVsbG8gV29ybGQ="
    }
  ]
}

Note that SGVsbG8gV29ybGQ= is the string "Hello World" encoded in Base64.

The complete request is:

curl -X POST -H "Content-Type: application/vnd.kafka.binary.v1+json" \
  --data '{"records":[{"value":"SGVsbG8gV29ybGQ="}]}' \
  http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-binary

You should see the message printed in the terminal window where the /apps/iot-stream:sensor-binary consumer is running.
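The Base64 step is easy to get wrong by hand, so here is a small Python sketch of it. The helper names (`encode_value`, `decode_value`, `binary_records`) are made up for this illustration; only the standard `base64` module is used.

```python
import base64


def encode_value(raw: bytes) -> str:
    """Base64-encode raw bytes into the string expected in a "value" field."""
    return base64.b64encode(raw).decode("ascii")


def decode_value(encoded: str) -> bytes:
    """Reverse of encode_value, useful for values read back from a topic."""
    return base64.b64decode(encoded)


def binary_records(payloads):
    """Build the request body for a list of raw byte payloads."""
    return {"records": [{"value": encode_value(p)} for p in payloads]}


if __name__ == "__main__":
    print(encode_value(b"Hello World"))
    print(binary_records([b"Hello World"]))
```

Serializing the dictionary returned by `binary_records` with `json.dumps` gives the body to send with the application/vnd.kafka.binary.v1+json content type.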

The records field of the HTTP body allows you to send multiple messages. For example, you can send:

curl -X POST -H "Content-Type: application/vnd.kafka.json.v1+json" \
  --data '{"records":[{"value": {"temp" : 12 , "speed" : 42 , "direction" : "NW"} }, {"value": {"temp" : 10 , "speed" : 37 , "direction" : "N"} } ]}' \
  http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-json

This command sends 2 messages and increments the offset by 2. You can do the same with binary content by adding new elements to the JSON array; for example:

curl -X POST -H "Content-Type: application/vnd.kafka.binary.v1+json" \
  --data '{"records":[{"value":"SGVsbG8gV29ybGQ="}, {"value":"Qm9uam91cg=="}]}' \
  http://localhost:8082/topics/%2Fapps%2Fiot-stream%3Asensor-binary

As you probably know, it is possible to set a key on a message to be sure that all the messages with the same key arrive in the same partition. For this, add the key attribute to the message as follows:

{
  "records": [
    {
      "key": "K001",
      "value": {
        "temp": 10,
        "speed": 40,
        "direction": "NW"
      }
    }
  ]
}

Now that you know how to post messages to MapR Streams topics using the REST Proxy, let's see how to consume the messages.
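Before moving on, the keyed record shown above can also be assembled programmatically. The following Python sketch uses hypothetical helper names (`make_record`, `make_body`) and simply adds the key attribute when one is given; how keys map to partitions is handled on the server side and is not modeled here.

```python
import json


def make_record(value, key=None):
    """Return one entry for the "records" array, with an optional key."""
    if key is None:
        return {"value": value}
    # Messages that share the same key are expected to land in the same partition.
    return {"key": key, "value": value}


def make_body(records):
    """Serialize a list of records into the JSON body of a publish request."""
    return json.dumps({"records": records})


if __name__ == "__main__":
    record = make_record({"temp": 10, "speed": 40, "direction": "NW"}, key="K001")
    print(make_body([record]))
```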

The REST proxy can also be used to consume messages from topics; for this you need to:

The following request creates the consumer instance:

curl -X POST -H "Content-Type: application/vnd.kafka.v1+json" \
  --data '{"name": "iot_json_consumer", "format": "json", "auto.offset.reset": "earliest"}' \
  http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-json

The response from the server looks like:

{
  "instance_id": "iot_json_consumer",
  "base_uri": "http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-json/instances/iot_json_consumer"
}

Note that we used the /consumers/[topic_name] endpoint to create the consumer.

The base_uri will be used by the subsequent requests to get the messages from the topic. Like any MapR Streams/Kafka consumer, its behavior is defined by auto.offset.reset. In this example the value is set to earliest, which means that the consumer will read the messages from the beginning. You can find more information about the consumer configuration in the MapR Streams documentation.

To consume the messages, just add the MapR Streams topic to the URL of the consumer instance.

The following request consumes the messages from the topic:

curl -X GET -H "Accept: application/vnd.kafka.json.v1+json" \
  http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-json/instances/iot_json_consumer/topics/%2Fapps%2Fiot-stream%3Asensor-json

This call returns the messages in a JSON document:

[
  {"key":null,"value":{"temp":10,"speed":40,"direction":"NW"},"topic":"/apps/iot-stream:sensor-json","partition":1,"offset":1},
  {"key":null,"value":{"temp":12,"speed":42,"direction":"NW"},"topic":"/apps/iot-stream:sensor-json","partition":1,"offset":2},
  {"key":null,"value":{"temp":10,"speed":37,"direction":"N"},"topic":"/apps/iot-stream:sensor-json","partition":1,"offset":3}
]

Each call to the API returns the new messages published, based on the offset of the last call.
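The two consumer calls above (create the instance, then read from its base_uri) can be sketched in Python as follows. The function names are hypothetical and the base URL is assumed to be the localhost:8082 address of the curl examples; the functions only build the requests, so nothing is sent until you pass them to `urllib.request.urlopen`.

```python
import json
import urllib.request
from urllib.parse import quote

BASE = "http://localhost:8082"  # assumed REST Proxy address


def create_consumer_request(topic_path, name, fmt="json", base=BASE):
    """Build the POST that creates a consumer instance (hypothetical helper)."""
    body = json.dumps(
        {"name": name, "format": fmt, "auto.offset.reset": "earliest"}
    )
    return urllib.request.Request(
        f"{base}/consumers/{quote(topic_path, safe='')}",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/vnd.kafka.v1+json"},
        method="POST",
    )


def read_request(base_uri, topic_path, fmt="json"):
    """Build the GET that reads new messages through a consumer instance.

    base_uri is the value returned by the server when the instance is created.
    """
    return urllib.request.Request(
        f"{base_uri}/topics/{quote(topic_path, safe='')}",
        headers={"Accept": f"application/vnd.kafka.{fmt}.v1+json"},
        method="GET",
    )


if __name__ == "__main__":
    topic = "/apps/iot-stream:sensor-json"
    create = create_consumer_request(topic, "iot_json_consumer")
    print(create.get_method(), create.full_url)
    # In a real run, base_uri comes from the JSON response of the create call.
    base_uri = f"{create.full_url}/instances/iot_json_consumer"
    print(read_request(base_uri, topic).full_url)
```

Calling the read request repeatedly mirrors the behavior described above: each call returns only the messages published since the previous one.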

Note that the consumer will be destroyed automatically after the idle time set by consumer.instance.timeout.ms (default value set to 300000 ms / 5 minutes).

The approach is the same if you need to consume binary messages; you only need to change the format and the Accept header.

Call this URL to create a consumer instance for the binary topic:

curl -X POST -H "Content-Type: application/vnd.kafka.v1+json" \
  --data '{"name": "iot_binary_consumer", "format": "binary", "auto.offset.reset": "earliest"}' \
  http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-binary

Then consume the messages, with the Accept header set to application/vnd.kafka.binary.v1+json:

curl -X GET -H "Accept: application/vnd.kafka.binary.v1+json" \
  http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-binary/instances/iot_binary_consumer/topics/%2Fapps%2Fiot-stream%3Asensor-binary

This call returns the messages in a JSON document, and the value is encoded in Base64:

[
  {"key":null,"value":"SGVsbG8gV29ybGQ=","topic":"/apps/iot-stream:sensor-binary","partition":1,"offset":1},
  {"key":null,"value":"Qm9uam91cg==","topic":"/apps/iot-stream:sensor-binary","partition":1,"offset":2}
]

As mentioned before, the consumer will be destroyed automatically based on the consumer.instance.timeout.ms configuration of the REST Proxy; it is also possible to destroy the instance using the consumer instance URI and an HTTP DELETE call, as follows:

curl -X DELETE http://localhost:8082/consumers/%2Fapps%2Fiot-stream%3Asensor-binary/instances/iot_binary_consumer

In this article you have learned how to use the Kafka REST Proxy for MapR Streams, which allows any application to use messages published in the MapR Converged Data Platform.

You can find more information about the Kafka REST Proxy in the MapR documentation and the following resources:

Posted by Tug Grall at 10:30 AM


MQTT (MQ Telemetry Transport) is a lightweight publish/subscribe messaging protocol. MQTT is widely used in Internet of Things applications, since it has been designed to run in remote locations on systems with a small footprint.

MQTT 3.1.1 is an OASIS standard, and you can find all the information at http://mqtt.org/

This article will guide you through the various steps to run your first MQTT application:

The source code of the sample application is available on GitHub.

Apache Flink is an open source platform for distributed stream and batch data processing. Flink is a streaming data flow engine with several APIs to create data-stream-oriented applications.

It is very common for Flink applications to use Apache Kafka for data input and output. This article will guide you through the steps to use Apache Flink with Kafka.

Get an introduction to streaming analytics, which gives you real-time insight from captured events and big data. There are applications across industries, from finance to wine making, though there are two primary challenges to be addressed.

Did you know that a plane flying from Texas to London can generate 30 million data points per flight? As Jim Daily of GE Aviation notes, that equals 10 billion data points in one year. And we’re talking about one plane alone. So you can understand why another top GE executive recently told Ericsson Business Review that "Cloud is the future of IT," with a focus on supporting challenging applications in industries such as aviation and energy.

Posted by Tug Grall at 3:30 PM
